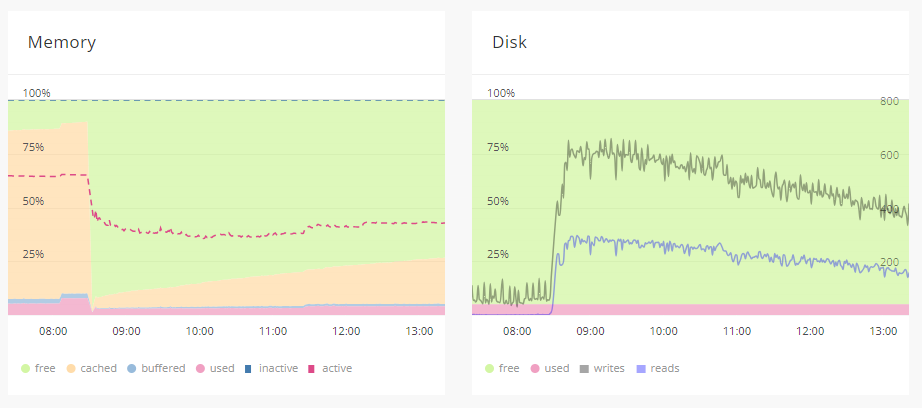

Logs play a crucial role in monitoring and maintaining servers, particularly when troubleshooting issues. In the past month, one of my projects began exhibiting unusual behavior: the server served static files only intermittently, sometimes returning a 404 error instead. The project has been growing rapidly, with disk usage approaching one hundred gigabytes and monthly traffic in the tens of terabytes. The number of files, including heavy high-resolution images, PDFs, and videos, has multiplied over time, and so has the number of concurrent users browsing the site's thousands of pages.

My initial suspicion was a lack of server resources, particularly given how much the buff/cache figure had grown. To check, I ran:

```shell
free -h
```

The output showed cached memory taking up nearly 90% of the RAM. That isn't necessarily a problem: the cache speeds up access to frequently used data, and the kernel can quickly free it when applications need more memory.
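To see the same split as a percentage without eyeballing `free -h`, the figures can be read straight from `/proc/meminfo`. This is a minimal sketch, assuming a kernel new enough to report `MemAvailable` (3.14 or later); the function name `meminfo_summary` is just for illustration. It mirrors how `free` defines buff/cache, namely `Buffers` + `Cached` + `SReclaimable`:

```shell
#!/usr/bin/env bash
# Print how much RAM the page cache occupies and how much is still
# available to applications, using the fields free(1) reads.
meminfo_summary() {
  awk '
    $1 == "MemTotal:"     { total = $2 }
    $1 == "MemAvailable:" { avail = $2 }
    $1 == "Buffers:"      { buffers = $2 }
    $1 == "Cached:"       { cached = $2 }
    $1 == "SReclaimable:" { sreclaim = $2 }
    END {
      # buff/cache as free(1) defines it: Buffers + Cached + SReclaimable
      cache = buffers + cached + sreclaim
      printf "buff/cache: %d%% of RAM\n", 100 * cache / total
      printf "available:  %d%% of RAM\n", 100 * avail / total
    }' /proc/meminfo
}

meminfo_summary
```

A high buff/cache percentage next to a healthy "available" percentage is the benign case described above.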

You might wonder, "How can I trust the server to know when to release cached memory?" The Linux kernel manages the page cache (the buff/cache figure) with least-recently-used style lists: when memory runs short, it reclaims cached pages that haven't been accessed recently, since they are the least likely to be needed again soon.

Even so, rather than tamper with kernel settings, I decided to simply reboot the server. RAM contents do not survive a reboot, so the cache starts again from a clean slate.

After the restart the cache was indeed empty and the server was reading from disk instead, but the issue persisted: embedded files such as static images were still missing from pages. It looked as if Nginx was reluctant to serve the files to end users.

In setups that use both Apache and Nginx, Nginx typically acts as a reverse proxy in front of Apache. It receives incoming requests from clients (real users and bots) and either serves them directly or forwards them to Apache, depending on the request type and configuration.

My next step was to examine the Nginx and Apache logs, usually found in `/var/log/nginx/` and `/var/log/apache2/` respectively. The Nginx error log contained an `alert` entry reporting "Too many open files":

```text
[alert] 2303#0: *365029 socket() failed (24: Too many open files) while connecting to upstream
```

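This error is common as a project grows. Error 24 (`EMFILE`) means the process has reached its limit on open file descriptors, and every socket counts toward that limit, so each client connection and each upstream connection to Apache consumes one, alongside the files being served. The first step is to check the current limits and raise them where possible.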
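Before changing anything, it can help to see how close each Nginx process actually is to its limit by comparing the number of entries in `/proc/<pid>/fd` with the soft limit in `/proc/<pid>/limits`. This is a minimal sketch, assuming it runs as root (or as the Nginx user), since other users cannot list another process's descriptors. It uses `pgrep -x nginx`, which, unlike `ps aux | grep nginx`, does not list the grep command itself. The function name `fd_usage` is hypothetical:

```shell
#!/usr/bin/env bash
# Report open file descriptors against the soft RLIMIT_NOFILE for one PID.
fd_usage() {
  local pid=$1 open soft
  # Each entry in /proc/<pid>/fd is one open descriptor (files, sockets, pipes).
  open=$(ls "/proc/$pid/fd" | wc -l)
  # The row looks like: Max open files  <soft>  <hard>  files
  soft=$(awk '/^Max open files/ { print $4 }' "/proc/$pid/limits")
  echo "pid $pid: $open open / soft limit $soft"
}

# Check every process named exactly "nginx" (master and workers).
for pid in $(pgrep -x nginx); do
  fd_usage "$pid"
done
```

A worker whose open count sits close to its soft limit is the one that will start logging errors like the alert above.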

To address the problem, I took the following steps:

1. Obtained the Nginx process ID using:

```shell
ps aux | grep nginx
```

2. Checked the limits using:

```shell
cat /proc/<PID>/limits
```

The output showed:

```text
Limit                     Soft Limit           Hard Limit           Units
Max open files            1024                 4096                 files
```

3. This limit was insufficient, so I raised it in `/etc/security/limits.conf`, the file Linux systems use to set per-user resource limits:

```text
*    soft    nofile    4096
*    hard    nofile    8192
```

To verify the changes, I ran:

```shell
tail -n4 /etc/security/limits.conf
```

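4. I also added `fs.file-max = 70000` to `/etc/sysctl.conf`. This is the system-wide limit on open file handles, so check the current value first with `sysctl fs.file-max`: it is often already higher, and the setting only helps if it raises the limit. I then applied the change with: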

```shell
sysctl -p
```

`sysctl -p` reloads `/etc/sysctl.conf`, so the new kernel setting took effect without rebooting the system.

5. Added `worker_rlimit_nofile 8192;` to the main context of `/etc/nginx/nginx.conf`, which sets the open-file limit for the Nginx worker processes directly.

6. I also found that `worker_processes` in the same file was set to `1`, so I changed it to `auto`, which starts one worker per CPU core. ;)

Finally, I restarted Nginx with `systemctl restart nginx`.

Voila! The problem is gone!
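To confirm that new limits are really in effect, it is worth checking the running workers rather than the config files. `/etc/security/limits.conf` is applied by `pam_limits` to login sessions, so a service started by systemd does not necessarily inherit it, whereas `worker_rlimit_nofile` sets the workers' limit directly. The sketch below prints each worker's `Max open files` row, matching workers by the `nginx: worker process` title Nginx gives them, plus the system-wide handle count from `/proc/sys/fs/file-nr`, whose three fields are allocated handles, allocated-but-unused handles, and the maximum (`fs.file-max`). The function name `file_handle_usage` is hypothetical:

```shell
#!/usr/bin/env bash
# Summarize system-wide file handle usage against fs.file-max.
file_handle_usage() {
  local allocated max
  # file-nr fields: allocated handles, allocated-but-unused handles
  # (always 0 on Linux 2.6 and later), and the maximum (fs.file-max).
  read -r allocated _ max < /proc/sys/fs/file-nr
  echo "file handles: $allocated allocated of $max allowed"
}

file_handle_usage

# Show the effective open-file limit of each running worker.
# Nginx retitles its workers "nginx: worker process", which pgrep -f can match.
for pid in $(pgrep -f '^nginx: worker'); do
  echo "worker $pid: $(grep '^Max open files' "/proc/$pid/limits")"
done
```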

However, with the expected increase in traffic and buffered/cached data, I anticipate needing to scale up resources and adjust kernel and Nginx server settings again in the future.
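When that time comes, `worker_connections` is the other number to keep in view. According to the Nginx documentation it counts all connections a worker holds, including those to proxied servers such as Apache, and the actual number of connections cannot exceed the open-file limit. Since served files and log files also use descriptors, a common rule of thumb (an assumption, not an official formula) is to keep `worker_rlimit_nofile` at about twice `worker_connections`. The sketch below applies that rule; the function name `min_rlimit_nofile` is hypothetical, and the parser is deliberately naive: it reads a single file and ignores `include` directives.

```shell
#!/usr/bin/env bash
# Suggest a minimum worker_rlimit_nofile from worker_connections.
# Naive parser: reads only the given file and ignores include directives.
min_rlimit_nofile() {
  local conf=${1:-/etc/nginx/nginx.conf} conns
  # Take the first uncommented "worker_connections N;" directive.
  conns=$(awk '$1 == "worker_connections" { sub(/;$/, "", $2); print $2; exit }' "$conf")
  # Nginx's built-in default is 512 when the directive is absent.
  conns=${conns:-512}
  # Rule of thumb (assumption): twice the connections leaves room for files and logs.
  echo $((conns * 2))
}

if [ -r /etc/nginx/nginx.conf ]; then
  echo "suggested minimum worker_rlimit_nofile: $(min_rlimit_nofile)"
fi
```

With `worker_processes auto`, total capacity is roughly the number of CPU cores (as reported by `nproc`) times `worker_connections`, which is worth re-checking alongside the limits as traffic grows.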

Good luck!

May all your endeavors be successful, and may your code always run smoothly.

Best Regards,

Artem